The Java Flight Recorder (JFR) is a powerful feature in the Oracle JDK. It allows you to record information about the Java runtime, and the application running in the Java runtime. When designing robots, it can be an invaluable tool. Robo4J has some integration with the Java Flight Recorder out of the box.

In this blog, I will use the JFR integration to simplify the calibration necessary to make use of the LaserScanner class.

Calibrating Servos

Step one is to calibrate whatever servos are involved in doing a scan. I have a pan servo and a tilt servo. Only the pan servo is actually involved in the LaserScanner class - it provides 2D scans. That said, I need the tilt servo to be calibrated properly too, if only to ensure that I get level scans. To calibrate the servos, see my Servo Calibration blog.

First Configuration

The second step is to configure reasonable defaults in the configuration file for the LaserScannerExample ($ROBO4J_HOME/robo4j-units-rpi/src/examples/resources/lidarexample.xml). The most important part is to copy the values from the pan servo you calibrated in the first step to the laserscanner.servo entry. Also, make sure to set the minimum acquisition time to match the version of the Lidar Lite you have. The v1 requires at least 20 ms. The v2 and later should be okay with 2 ms. For the rest of the settings, leave the defaults for now.

Doing Flight Recordings

The next step is to run a few scans using the LaserScannerExample and look at the results.

First, source the environment variables:

source scripts/rpi/environment.sh

Next, run the LaserScannerExample with the flight recorder enabled. We will connect over JMX, so you need to open the appropriate ports, for example:

sudo java -XX:+UnlockCommercialFeatures -XX:+FlightRecorder -Dcom.sun.management.jmxremote.port=7091 -Dcom.sun.management.jmxremote.rmi.port=7091 -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Djava.rmi.server.hostname=coffewlan -cp $ROBO4J_PATH com.robo4j.units.rpi.lidarlite.LaserScannerExample

Next, get the robo4j-tools repo for Robo4J, and follow the instructions to start Java Mission Control (JMC) with the Robo4J plug-in.

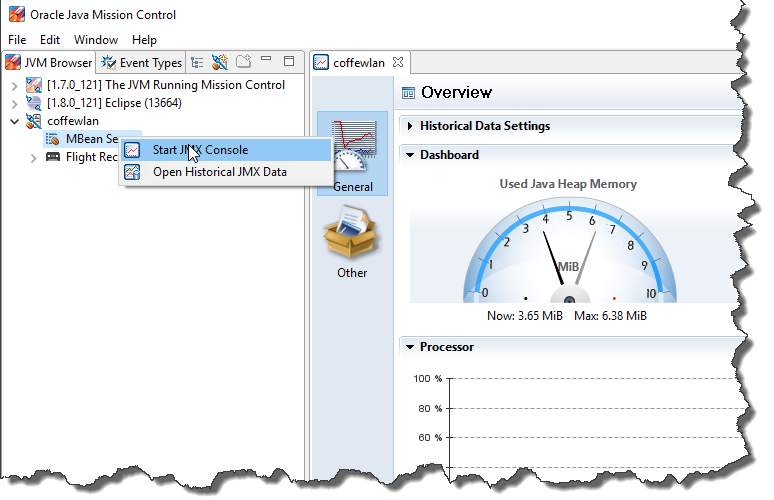

Create a connection to the Raspberry Pi running the example. You can connect with the JMX console to verify that the connection works.
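
If you prefer to verify the JMX connection from code before opening JMC, the following is a minimal sketch using only the standard javax.management API. It assumes the host name (coffewlan) and port (7091) from the example command line above; the class name JmxCheck and its serviceUrl helper are made up for this illustration and are not part of Robo4J.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.RuntimeMXBean;
import java.net.MalformedURLException;
import javax.management.MBeanServerConnection;
import javax.management.remote.JMXConnector;
import javax.management.remote.JMXConnectorFactory;
import javax.management.remote.JMXServiceURL;

// Hypothetical helper, not part of Robo4J: checks that a remote JVM answers over JMX.
final class JmxCheck {

    /** Builds the conventional RMI-based JMX service URL for the given host and port. */
    static JMXServiceURL serviceUrl(String host, int port) {
        try {
            return new JMXServiceURL(
                    "service:jmx:rmi:///jndi/rmi://" + host + ":" + port + "/jmxrmi");
        } catch (MalformedURLException e) {
            throw new IllegalArgumentException("Bad host or port: " + host + ":" + port, e);
        }
    }

    public static void main(String[] args) throws Exception {
        // Defaults are assumptions taken from the example command line.
        String host = args.length > 0 ? args[0] : "coffewlan";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 7091;

        // The connector is closed automatically at the end of the try block.
        try (JMXConnector connector = JMXConnectorFactory.connect(serviceUrl(host, port))) {
            MBeanServerConnection connection = connector.getMBeanServerConnection();
            RuntimeMXBean runtime = ManagementFactory.newPlatformMXBeanProxy(
                    connection, ManagementFactory.RUNTIME_MXBEAN_NAME, RuntimeMXBean.class);
            System.out.println("Connected to " + runtime.getVmName()
                    + " " + runtime.getVmVersion());
        }
    }
}
```

If the connection fails, the program exits with an exception, which usually points to a closed port or a host name that does not match the one passed in -Djava.rmi.server.hostname.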

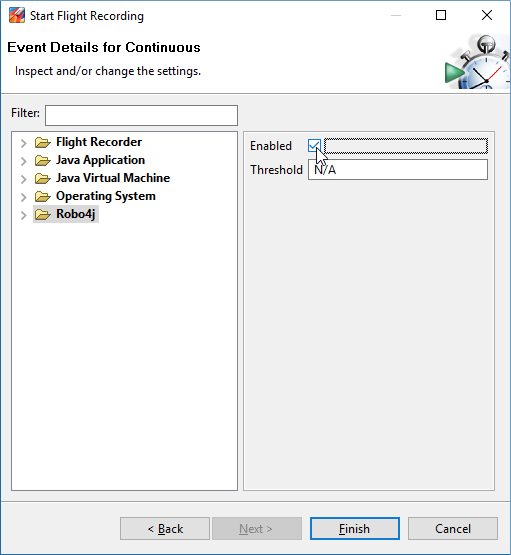

Next, create a recording from Java Mission Control by right-clicking on your connection and selecting "Start Flight Recording". You can use any recording template, just make sure to enable the Robo4J-specific events on the last page of the wizard.

Click Finish to start the recording. Once done, it will be downloaded to JMC automatically. Now, since you are using the Robo4J plug-in, the scans will be visualized for you. Open the Robo4J tab group, and select a scan to take a look at it.

If you suspect your servo is travelling too fast or too slow (multiple sample points at the end with the same reading(s), or weird artifacts at the end of a scan), adjust the angularSpeed. If you feel the laser does not get enough time to acquire, increase the minAcquisition time. Also, since we move the servo whilst acquiring, you may need to compensate for the angular speed. Since I was lazy and didn't have time to spend looking for a perfect physical model, there is a trim parameter to compensate (to make the left-to-right and right-to-left scans align).
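
To get a feel for how angular speed and acquisition time constrain each other, here is a rough back-of-the-envelope sketch. It assumes you want one sample per fixed angular step, with the speed in degrees per second and the acquisition time in milliseconds. These units, and the ScanTiming class itself, are assumptions for illustration only; this is not how Robo4J computes its scan timing.

```java
// Hypothetical helper, not part of Robo4J: simple arithmetic on scan timing.
final class ScanTiming {

    /**
     * Upper bound on the servo speed (degrees per second) if every sample
     * needs at least minAcquisitionMillis and samples are stepDegrees apart.
     */
    static double maxAngularSpeed(double stepDegrees, double minAcquisitionMillis) {
        if (stepDegrees <= 0 || minAcquisitionMillis <= 0) {
            throw new IllegalArgumentException("Step and acquisition time must be positive");
        }
        return stepDegrees / (minAcquisitionMillis / 1000.0);
    }

    public static void main(String[] args) {
        // Acquisition times quoted earlier for the Lidar Lite v1 (20 ms) and v2 (2 ms);
        // the one-degree step is an assumed value.
        System.out.println("v1 bound (deg/s): " + maxAngularSpeed(1.0, 20.0));
        System.out.println("v2 bound (deg/s): " + maxAngularSpeed(1.0, 2.0));
    }
}
```

Treat the result only as a ceiling to start from. The servo's own maximum speed and the artifacts you see in the recordings are what should drive the final angularSpeed setting.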

Below is a picture of a couple of individual scans on my robot without trim (simply shift- or control-click a few scans to render them simultaneously):

And the picture below is with trim set to 5.5:

Simply keep doing recordings and bisect your way to a proper trim value for your particular setup.
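
The bisection itself is done by hand, one recording per step, but the bookkeeping can be sketched in code. The example below assumes you can reduce each recording to a signed misalignment between the left-to-right and right-to-left scans (negative when the trim is too small, positive when it is too large). The TrimBisection class and the synthetic misalignment function in main are hypothetical stand-ins for that manual judgement.

```java
import java.util.function.DoubleUnaryOperator;

// Hypothetical helper, not part of Robo4J: bisects towards a trim value
// at which the signed misalignment between scan directions changes sign.
final class TrimBisection {

    static double bisect(DoubleUnaryOperator misalignment,
                         double low, double high, double tolerance) {
        if (tolerance <= 0 || low >= high) {
            throw new IllegalArgumentException("Need low < high and a positive tolerance");
        }
        double atLow = misalignment.applyAsDouble(low);
        double atHigh = misalignment.applyAsDouble(high);
        if (atLow == 0) return low;
        if (atHigh == 0) return high;
        if (atLow * atHigh > 0) {
            throw new IllegalArgumentException("The interval does not bracket a sign change");
        }
        while (high - low > tolerance) {
            double mid = (low + high) / 2;
            double atMid = misalignment.applyAsDouble(mid);
            if (atMid == 0) return mid;
            if (atLow * atMid < 0) {
                high = mid;          // sign change lies in the lower half
            } else {
                low = mid;           // sign change lies in the upper half
                atLow = atMid;
            }
        }
        return (low + high) / 2;
    }

    public static void main(String[] args) {
        // Synthetic stand-in: pretends the scans align perfectly at trim 5.5.
        DoubleUnaryOperator synthetic = trim -> trim - 5.5;
        System.out.println("Suggested trim: " + bisect(synthetic, 0.0, 10.0, 0.01));
    }
}
```

Each call to the misalignment function corresponds to one recording in practice, so a coarse tolerance keeps the number of recordings manageable.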