This blog post will show you how to quickly get started with Robo4J and will explain some key concepts.

Robo4J contains some key core “modules”. We will use the word “module” loosely here, as they are not quite yet JDK 9 modules. These modules are all defined as their own Gradle projects and have their own compile time dependencies expressed in Gradle.

When you build your own robot, you will normally depend on robo4j-core and on the units module for the platform your robot uses. For example, robo4j-units-rpi for the Raspberry Pi, or robo4j-units-lego for a Lego EV3 robot.

In this blog post I will use the provided Raspberry Pi lcd example to show how to set up and run Robo4J on the Raspberry Pi. Miro will post a Lego example later.

| Module | Description |
| --- | --- |
| robo4j-math | Commonly used constructs such as points, scans (for laser range scans), feature extraction and similar. Most modules depend on this module. |
| robo4j-hw-* | A platform-specific module with easy-to-use abstractions for a certain hardware platform. For example robo4j-hw-rpi, which contains easy-to-use abstractions for common off-the-shelf hardware from Adafruit and Sparkfun. |
| robo4j-core | Defines the core high-level concepts of the Robo4J framework, such as RoboContext, RoboUnit and RoboReference. |
| robo4j-units-* | A platform-specific module which defines high-level RoboUnits that can be included in your projects. This module ties the hardware-specific module into the Robo4J core framework, providing ready-to-use units that can simply be configured, either in XML or code. |

Note that the robo4j-hw-* modules can be used without buying into the rest of the framework. If you simply want to bootstrap the use of the hardware you’ve purchased, without using the rest of the framework, that is certainly possible. Hopefully you will find the rest of the framework useful enough though.

The first thing you want to do is clone the robo4j core repo:

git clone https://github.com/Robo4J/robo4j.git

Once git has finished fetching the source, install the modules into the local Maven repository:

gradlew install

Now you are ready to pull the source for the LCD example:

git clone https://github.com/Robo4J/robo4j-rpi-lcd-example.git

We will finally build it all into a runnable fat jar:

gradlew :fatJar

Now, to run the example, simply run the jar:

java -jar build/libs/robo4j-rpi-lcd-example-alpha-0.3.jar

If you do not have a Raspberry Pi with an Adafruit LCD shield connected to it, you can still run the example on any hardware by asking the LCD factory for a Swing mockup of the Adafruit LCD:

java -Dcom.robo4j.hw.rpi.i2c.adafruitlcd.mock=true -jar build/libs/robo4j-rpi-lcd-example-alpha-0.3.jar
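To make the switch a bit more concrete, here is a minimal sketch of how a factory could choose a mock based on that system property. Only the property name comes from the command above. The types `LcdDisplay`, `HardwareLcdStub`, `MockLcd` and `LcdFactorySketch` are hypothetical names made up for this illustration, and the real robo4j-hw-rpi factory may well be implemented differently.

```java
// Hypothetical types for illustration only; this is not the Robo4J source.
interface LcdDisplay {
    void setText(String text);

    String getText();
}

// Stand-in for a class that would talk to the real shield over I2C.
final class HardwareLcdStub implements LcdDisplay {
    private String text = "";

    public void setText(String text) {
        this.text = text;
    }

    public String getText() {
        return text;
    }
}

// Stand-in for the Swing mockup; here it only stores the text.
final class MockLcd implements LcdDisplay {
    private String text = "";

    public void setText(String text) {
        this.text = text;
    }

    public String getText() {
        return text;
    }
}

final class LcdFactorySketch {
    // Property name taken from the command line shown in the post.
    static final String MOCK_PROPERTY = "com.robo4j.hw.rpi.i2c.adafruitlcd.mock";

    private LcdFactorySketch() {
    }

    static LcdDisplay createLcd() {
        // Boolean.getBoolean returns true only if the system property
        // exists and equals "true" (ignoring case).
        return Boolean.getBoolean(MOCK_PROPERTY) ? new MockLcd() : new HardwareLcdStub();
    }

    public static void main(String[] args) {
        LcdDisplay lcd = createLcd();
        lcd.setText("Hello");
        System.out.println(lcd.getClass().getSimpleName() + " shows: " + lcd.getText());
    }
}
```

The point of this shape is that the calling code only ever sees the interface, so the same example runs unchanged with or without the hardware.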

(This particular example can actually be run without using Robo4J core at all; see my previous post for a robo4j-hw-rpi version of the example.)

Now, let’s see how the example is actually set up. There are two example launchers for the project.

One sets up Robo4J using an XML configuration file, and one sets everything up in Java. Both are valid methods, and sometimes you may want to mix them, which the builder supports. I will use the XML version in this blog.

In this example there are mainly two units that we need to configure: a button unit and an LCD unit. They are both actually on the same hardware address (Adafruit uses an MCP23017 port expander to talk to both the buttons and the LCD using the same I2C address). However, it is much nicer to treat them as two logical units when wiring things up in the software, and this is exactly how Robo4J treats them.
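The idea of two logical units on top of one physical device can be sketched in plain Java. This is a toy model under my own assumptions, not how Robo4J implements it: `SharedI2cDevice`, `I2cDeviceRegistry`, `LcdView` and `ButtonsView` are hypothetical names, and bus 1 with address 0x20 are example values only.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for one physical chip at a given I2C bus and address.
final class SharedI2cDevice {
    final int bus;
    final int address;

    SharedI2cDevice(int bus, int address) {
        this.bus = bus;
        this.address = address;
    }
}

// Hands out exactly one device object per (bus, address) pair.
final class I2cDeviceRegistry {
    private static final Map<String, SharedI2cDevice> DEVICES = new HashMap<>();

    private I2cDeviceRegistry() {
    }

    static synchronized SharedI2cDevice get(int bus, int address) {
        return DEVICES.computeIfAbsent(bus + ":" + address,
                key -> new SharedI2cDevice(bus, address));
    }
}

// Logical unit for the display part of the shield.
final class LcdView {
    final SharedI2cDevice device;

    LcdView(int bus, int address) {
        this.device = I2cDeviceRegistry.get(bus, address);
    }
}

// Logical unit for the buttons on the same shield.
final class ButtonsView {
    final SharedI2cDevice device;

    ButtonsView(int bus, int address) {
        this.device = I2cDeviceRegistry.get(bus, address);
    }
}

final class SharedAddressDemo {
    public static void main(String[] args) {
        // Example values only: bus 1, address 0x20.
        LcdView lcd = new LcdView(1, 0x20);
        ButtonsView buttons = new ButtonsView(1, 0x20);
        System.out.println("Same device: " + (lcd.device == buttons.device));
    }
}
```

Both views are configured independently, yet end up talking to the same underlying device object, which mirrors the two-units-one-address setup described above.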

Here is the most relevant part of the XML file:

Note that the AdafruitLcdUnit and the AdafruitButtonUnit are provided for us, and they are simply configured for the example. Also note that they reside on the same hardware address, as expected. No code is changed or added for configuring these units. The controller unit is however ours, and it defines what should happen when a button is pressed.

The following snippet of source shows how to instantiate it all (focusing on the most relevant parts of the code):

We get ourselves a RoboBuilder, we add the configuration file as an input stream to the builder, and that’s it in terms of configuration. The builder will be used to instantiate the individual RoboUnits in the context of the overarching RoboContext. The RoboContext can be thought of as a reference to a “robot” (a collection of RoboUnits with (normally) a shared life cycle). It is currently a local reference (only in the local Java runtime), but once we get the network services up, you will also be able to look up remote RoboContexts.

Once we have the RoboContext set up, we start it, which will in turn start the individual RoboUnits. After that is done, we also provide a little start message to the LCD by getting a reference to the RoboUnit named “lcd” and sending it an initial LcdMessage.
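The flow just described (register units, start the context, look up a unit by name, send it a message) can be modelled with a few lines of plain Java. Note that this is a toy model and deliberately does not use the Robo4J API: `ToyUnit`, `RecordingLcdUnit` and `ToyContext` are hypothetical names, and the message is a plain string rather than an LcdMessage.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Toy counterpart of a unit: it has an id, a life cycle and a message handler.
abstract class ToyUnit {
    final String id;
    boolean started;

    ToyUnit(String id) {
        this.id = id;
    }

    void start() {
        started = true;
    }

    abstract void onMessage(Object message);
}

// A unit that simply records what it was asked to show.
final class RecordingLcdUnit extends ToyUnit {
    final List<String> shown = new ArrayList<>();

    RecordingLcdUnit(String id) {
        super(id);
    }

    @Override
    void onMessage(Object message) {
        shown.add(String.valueOf(message));
    }
}

// Toy counterpart of a context: a named collection of units with a shared life cycle.
final class ToyContext {
    private final Map<String, ToyUnit> units = new LinkedHashMap<>();

    void add(ToyUnit unit) {
        units.put(unit.id, unit);
    }

    // Starting the context starts every unit registered in it.
    void start() {
        units.values().forEach(ToyUnit::start);
    }

    ToyUnit getReference(String id) {
        ToyUnit unit = units.get(id);
        if (unit == null) {
            throw new IllegalArgumentException("No unit named " + id);
        }
        return unit;
    }
}

final class ToyContextDemo {
    public static void main(String[] args) {
        ToyContext context = new ToyContext();
        RecordingLcdUnit lcd = new RecordingLcdUnit("lcd");
        context.add(lcd);
        context.start();
        // Look the unit up by name and send it an initial message.
        context.getReference("lcd").onMessage("Welcome!");
        System.out.println(lcd.shown);
    }
}
```

Looking units up by name is what keeps the launcher decoupled from the concrete unit classes: the launcher only needs to know that something called “lcd” exists in the context.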

The controller (which is not an off-the-shelf-unit) runs little demos on the LCD, and allows the user to navigate between the demos using the up and down buttons. Simply go here for the source.

I will simply note that the most important part of building a custom unit is to override the onMessage method, and that this unit is somewhat different to most units, as it is actually synchronizing on an internal state, and will skip all button presses that arrive whilst a demo is running.
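A minimal sketch of the "skip presses while a demo is running" behaviour could look like the following. It is not the actual controller source: `DemoControllerSketch` is a hypothetical class, its `onMessage` signature is invented for the illustration, and it uses an `AtomicBoolean` as the guard, which may differ from how the real unit synchronizes on its state.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical controller; it does not extend any Robo4J class.
final class DemoControllerSketch {
    // True while a demo is in progress.
    private final AtomicBoolean demoRunning = new AtomicBoolean(false);

    /**
     * Handles a button press by running the given demo.
     *
     * @return true if the demo was run, false if the press was skipped
     *         because another demo was still running
     */
    boolean onMessage(String button, Runnable demo) {
        // Atomically claim the "running" flag; if it is already set, skip the press.
        if (!demoRunning.compareAndSet(false, true)) {
            return false;
        }
        try {
            demo.run();
            return true;
        } finally {
            // Always release the flag, even if the demo throws.
            demoRunning.set(false);
        }
    }

    public static void main(String[] args) {
        DemoControllerSketch controller = new DemoControllerSketch();
        boolean handled = controller.onMessage("UP", () -> {
            // A press that arrives while the demo is running is skipped.
            boolean nested = controller.onMessage("DOWN", () -> { });
            System.out.println("Press during demo handled: " + nested);
        });
        System.out.println("First press handled: " + handled);
    }
}
```

Skipping instead of queueing means a user who mashes the buttons during a long demo does not trigger a backlog of demos afterwards.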

Summary

This was a brief introduction to what the Robo4J framework currently looks like. Note that we have changes lined up for the core which are not yet implemented, such as changing the threading model/behaviour for individual RoboUnits using annotations. Expect things to change before we reach 1.0. Also note that we currently have a very limited number of units implemented. This will also change before we release a 1.0. We will not release a first version of the APIs until we have implemented a few more robots in the framework.

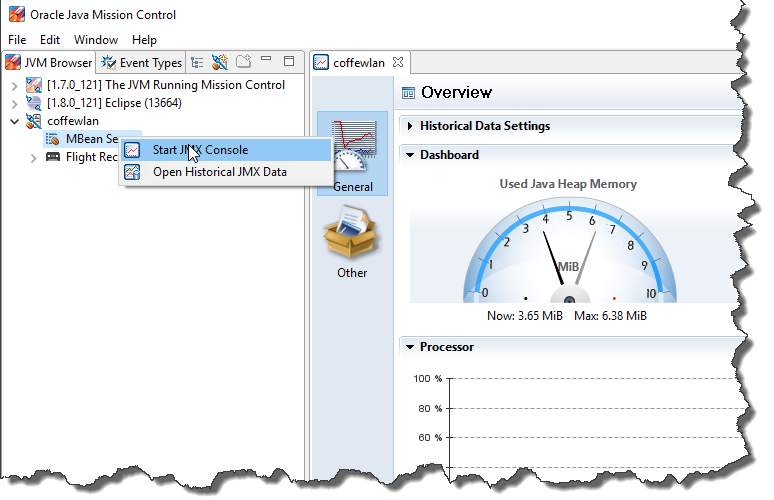

One of the robots being migrated to Robo4J right now is Coff-E.

Hope this helps, and please note that this is all changing from day to day. Things will continue to change until, at least, after this summer. If you would like to add your own units, hardware abstractions or similar, feel free to contact us at info@robo4j.io. We’d be happy to get more help!

:)

/Marcus